Summary

Most deep learning

algorithms are supervised, requiring a large amount of paired

input-output data to train the parameters (e.g. DNN weights) in the

learning systems. Such data are often very expensive to acquire in many

practical applications. Unsupervised learning aims to eliminate the

use of such costly training data in learning the system parameters, and

is expected to become a new driving force for future breakthroughs

in artificial intelligence (AI) applications.

The key to

successful unsupervised learning is to intelligently exploit rich

sources of world knowledge and prior information, including inherent

statistical structures of input and output, nonlinear (bi-directional)

relations between input and output (both inside and outside the

application domains), and distributional properties of input/output

sequences. In this keynote, I will present a set of recent experiments

on unsupervised learning in sequential classification tasks. The novel

unsupervised learning algorithm to be described, inspired by concepts

from cryptography research, carefully explores the statistical structure

in output sequences, and is shown to achieve classification accuracy

comparable to the fully supervised system.
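
As a toy illustration of exploiting the statistical structure of outputs, the sketch below recovers a labelling without any paired input-output data by matching how often each unlabeled cluster occurs against a known prior over labels, much as classical frequency analysis breaks a substitution cipher. It is not the algorithm presented in the keynote; the function name, the data and the prior are all hypothetical.

```python
from collections import Counter

def recover_label_mapping(cluster_ids, label_prior):
    """Map anonymous cluster ids to labels by matching frequency ranks.

    cluster_ids: sequence of arbitrary ids produced without any labels.
    label_prior: dict mapping each label to its prior probability.
    """
    # Rank clusters by how often they occur, most frequent first.
    ranked_clusters = [c for c, _ in Counter(cluster_ids).most_common()]
    # Rank labels by prior probability, most probable first.
    ranked_labels = sorted(label_prior, key=label_prior.get, reverse=True)
    # Pair them rank by rank, as in classical frequency analysis.
    return dict(zip(ranked_clusters, ranked_labels))

# Hypothetical data: a hidden label sequence and an unlabeled clustering of it.
hidden = list("aaaaaabbbc")          # 6 a's, 3 b's, 1 c
scramble = {"a": 2, "b": 0, "c": 1}  # unknown to the learner
clusters = [scramble[y] for y in hidden]

mapping = recover_label_mapping(clusters, {"a": 0.6, "b": 0.3, "c": 0.1})
decoded = [mapping[c] for c in clusters]
print(mapping)
print(decoded == hidden)
```

Rank matching only works when the label frequencies are well separated; richer sequence statistics, such as n-gram structure, would be needed otherwise.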

Short-bio

Li Deng recently joined

Citadel, one of the most successful investment firms in the world, as

its Chief AI Officer. Prior to Citadel, he was Chief Scientist of AI and

Partner Research Manager at Microsoft. Prior to Microsoft, he was a

tenured Full Professor at the University of Waterloo in Ontario, Canada,

and held teaching/research positions at MIT (Cambridge), ATR (Kyoto, Japan) and

HKUST (Hong Kong). He is a Fellow of the IEEE, a Fellow of the

Acoustical Society of America, and a Fellow of the ISCA. He has been

an Affiliate Professor at the University of Washington (since 2000).

He

was on the Board of Governors of the IEEE Signal Processing Society and of the

Asia-Pacific Signal and Information Processing Association (2008-2010).

He was Editor-in-Chief of IEEE Signal Processing Magazine (2009-2011)

and IEEE/ACM Transactions on Audio, Speech, and Language Processing

(2012-2014), for which he received the IEEE SPS Meritorious Service

Award. He received the 2015 IEEE SPS Technical Achievement Award for his

'Outstanding Contributions to Automatic Speech Recognition and Deep

Learning' and numerous best paper and scientific awards for his

contributions to artificial intelligence, machine learning, multimedia

signal processing, speech and human language technology, and their

industrial applications. (https://lidengsite.wordpress.com/publications-books/)

Summary

Deep learning has made

great progress in a variety of language tasks. However, there are still

many practical and theoretical problems and limitations. In this talk I

will introduce solutions to some of these:

How to predict previously

unseen words at test time.

How to have a single input and output

encoding for words.

How to grow a single model for many tasks.

How

to use a single end-to-end trainable architecture for question

answering.

Short-bio

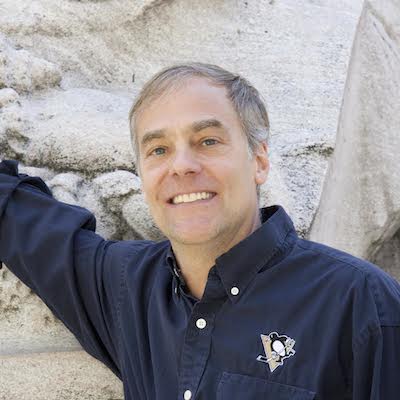

Richard Socher is Chief

Scientist at Salesforce where he leads the company's research efforts

and works on bringing state-of-the-art artificial intelligence solutions

to Salesforce.

Prior to Salesforce, Richard was the CEO and founder

of MetaMind, a startup acquired by Salesforce in April 2016. MetaMind's

deep learning AI platform analyzes, labels and makes predictions on

image and text data so businesses can make smarter, faster and more

accurate decisions than ever before.

Richard was awarded the

Distinguished Application Paper Award at the International Conference on

Machine Learning (ICML) 2011, the 2011 Yahoo! Key Scientific Challenges

Award, a Microsoft Research PhD Fellowship in 2012, a 2013 'Magic Grant'

from the Brown Institute for Media Innovation, the 2014 GigaOM Structure

Award and is currently a member of the WEF Young Global Leaders Class of

2017.

Richard obtained his PhD from Stanford working on deep learning

with Chris Manning and Andrew Ng and won the best Stanford CS PhD thesis

award.

Summary

This course covers the

fundamentals of different deep learning architectures, which will be

explained through three types of mainstream applications in image

processing, pattern recognition and computer vision. A range of network

architectures will be reviewed, including multi-layer perceptrons,

sparse auto-encoders, restricted Boltzmann machines, and convolutional

neural networks. These networks will be illustrated with applications in

three categories, each characterized by the type of output into which

the input image is transformed. Specifically, the categories are

characterized by:

i) Image to image transformation (e.g., image

denoising and colorization -- here the output is an image of the same

complexity as the input)

ii) Image to mid-level representation (e.g.,

image segmentation, contour detection -- here the output image is

compact as it depicts only certain succinct properties of the

image)

iii) Image to high-level representation (e.g., image

classification, object detection and face recognition -- here the output

is a description of the input image, confined to a small number of bits)

Syllabus

Basics and Network

Architectures:

1) Basic concepts from probability and information

theory

2) Introduction to linear classifiers, logistic regression,

gradient descent and optimization

3) Neural networks and

backpropagation

4) Sparse auto-encoders and restricted Boltzmann

machines

5) Basics of convolutional neural networks

6) General

practices in model training

7) Deconvolution, ConvNet

visualization

8) Popular ConvNet architectures used in computer

vision.

Applications:

1) Image Denoising, Image

Colorization

2) Clustering, Image Segmentation, Contour

Detection

3) Image Classification, Object Detection, Face Recognition

References

[1] Christopher M.

Bishop, Neural Networks for Pattern Recognition, 1995.

[2] Ian

Goodfellow, Yoshua Bengio and Aaron Courville, Deep Learning, MIT Press,

2016.

[3] Y. LeCun, B. Boser, J. S. Denker, D. Henderson, R. E.

Howard, W. Hubbard and L. D. Jackel, Backpropagation Applied to

Handwritten Zip Code Recognition, Neural Computation, 1989.

[4] A.

Krizhevsky, I. Sutskever and G. E. Hinton, Imagenet Classification with

Deep Convolutional Neural Networks, NIPS, 2012.

[5] Ross Girshick,

Jeff Donahue, Trevor Darrell and Jitendra Malik, Rich Feature

Hierarchies for Accurate Object Detection and Semantic Segmentation,

CVPR, 2014.

[6] Yaniv Taigman, Ming Yang, Marc'Aurelio Ranzato

and Lior Wolf, DeepFace: Closing the Gap to Human-Level Performance in

Face Verification, CVPR, 2014.

[7] Jonathan Long, Evan Shelhamer and

Trevor Darrell, Fully Convolutional Networks for Semantic Segmentation,

CVPR, 2015.

[8] E. Ergul, N. Arica, N. Ahuja and S. Erturk, Clustering

Through Hybrid Network Architecture With Support Vectors, IEEE Trans. on

Neural Networks and Learning Systems, 2016.

[9] Richard Zhang, Phillip

Isola and Alexei A. Efros, Colorful Image Colorization, ECCV, 2016.

Pre-requisites

Linear Algebra and

Calculus, Probability and Statistics. Basics of Image Processing, Pattern

Recognition and Computer Vision

Short-bio

Narendra

Ahuja is Research Professor at the University of Illinois at

Urbana-Champaign Dept. of Electrical and Computer Engineering, Beckman

Institute, and Coordinated Science Laboratory, and Founding Director of

Information Technology Research Academy, Ministry of Electronics and

Information Technology, Government of India. He received B.E. with

honors in electronics engineering from Birla Institute of Technology and

Science, Pilani, India, M.E. with distinction in electrical

communication engineering from Indian Institute of Science, Bangalore,

India, and Ph.D. in computer science from University of Maryland,

College Park, USA. In 1979, he joined the faculty of the University of

Illinois where he was Donald Biggar Willett Professor of Engineering

until 2012. During 1999-2002, he served as the Founding Director of

International Institute of Information Technology, Hyderabad, India. He

has co-authored the books Pattern Models (Wiley), Motion and Structure

from Image Sequences (Springer-Verlag), and Face and Gesture Recognition

(Kluwer). His awards include: Emanuel R. Piore award of the IEEE,

Technology Achievement Award of the International Society for Optical

Engineering, and TA Stewart-Dyer/Frederick Harvey Trevithick Prize of

the Institution of Mechanical Engineers; and with his students, best

paper awards from International Conferences on Pattern Recognition

(Piero Zamperoni Award, etc.), Symposium on Eye Tracking Research and

Applications, IEEE International Workshop on Computer Vision in Sports

and IEEE Transactions on Multimedia. He has received 4 patents. His

algorithms and prototype systems have been used by about 10

companies/other organizations. He is a fellow of IEEE, AAAI, IAPR, ACM,

AAAS and SPIE.

This course will be offered in collaboration with

Dr. Jagannadan Varadarajan, Research Scientist, Advanced Digital

Sciences Center, Singapore. Dr. Jagannadan received his Ph.D. in

computer science from EPFL, Switzerland in 2012. His interests include

computer vision and machine learning.

Summary

The process of learning is

essential for building natural or artificial intelligent systems. Thus,

not surprisingly, machine learning is at the center of artificial

intelligence today. And deep learning--essentially learning in complex

systems comprised of multiple processing stages--is at the forefront of

machine learning. The lectures will provide an overview of neural

networks and deep learning with an emphasis on first principles and

theoretical foundations. The lectures will also provide a brief

historical perspective of the field. Applications will be focused on

difficult problems in the natural sciences, from physics, to chemistry,

and to biology.

Syllabus

1: Introduction and

Historical Background. Building Blocks. Architectures. Shallow Networks.

Design and Learning.

2: Deep Networks. Backpropagation.

Underfitting, Overfitting, and Tricks of the Trade.

3: Two-Layer

Networks. Universal Approximation Properties. Autoencoders.

4: Learning in the Machine. Local Learning and the Learning Channel.

Dropout. Optimality of BP and Random BP.

5: Convolutional Neural

Networks. Applications.

6: Recurrent Networks. Hopfield model.

Boltzmann machines.

7: Recursive and Recurrent Networks. Design and

Learning. Inner and Outer Approaches.

8: Applications to

Physics.

9: Applications to Chemistry.

10: Applications to

Biology.

Pre-requisites

Basic algebra,

calculus, and probability at the introductory college level. Some

previous knowledge of machine learning could be useful but is not

required.

Short-bio

Pierre Baldi earned MS

degrees in Mathematics and Psychology from the University of Paris, and

a PhD in Mathematics from the California Institute of Technology. He is

currently Chancellor's Professor in the Department of Computer Science,

Director of the Institute for Genomics and Bioinformatics, and Associate

Director of the Center for Machine Learning and Intelligent Systems at

the University of California Irvine. The long term focus of his research

is on understanding intelligence in brains and machines. He has made

several contributions to the theory of deep learning, and developed and

applied deep learning methods for problems in the natural sciences such

as the detection of exotic particles in physics, the prediction of

reactions in chemistry, and the prediction of protein secondary and

tertiary structure in biology. He has written four books and over 300

peer-reviewed articles. He is the recipient of the 1993 Lew Allen Award

at JPL, the 2010 E. R. Caianiello Prize for research in machine

learning, and a 2014 Google Faculty Research Award. He is an Elected

Fellow of the AAAS, AAAI, IEEE, ACM, and ISCB.

Summary

The abundance of devices

with cameras and numerous real-world application scenarios for AI and

autonomous robots create an increasing demand for human-level visual

perception of complex scenes. Hierarchical convolutional neural networks

have a long and successful history for learning pattern recognition

tasks in visual perception. They extract increasingly complex features

by local, convolutional computations, and create invariances to

transformations by pooling operations. In recent years, advances in

parallel computing, such as the use of programmable GPUs, the

availability of large annotated image and video data sets, and advances

in deep learning methods yielded dramatic progress in visual perception

performance. The course will cover motivations for deep convolutional

networks from visual statistics and visual cortex, feed-forward networks

for image categorization, object detection, and object-class

segmentation, recurrent architectures for visual perception, 3D

perception, and spatial transformations in convolutional networks.

State-of-the-art examples from computer vision and robotics will be used

to illustrate the approaches.
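
The two mechanisms named in this summary, local convolutional feature extraction and invariance through pooling, can be sketched in a few lines. The example below is a minimal pure-Python illustration and not course material: the 2x2 kernel, the bias of -3 and the 6x6 test images are hypothetical choices, and a trained network would learn its kernels from data.

```python
def conv2d_valid(image, kernel):
    """Valid-mode 2D cross-correlation of a list-of-lists image with a kernel."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b]
                for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]

def relu_with_bias(feature_map, bias):
    """Add a bias and clip negative values to zero."""
    return [[max(0, v + bias) for v in row] for row in feature_map]

def max_pool(feature_map, size):
    """Non-overlapping max pooling with a square window; ragged edges are dropped."""
    h, w = len(feature_map), len(feature_map[0])
    return [
        [
            max(feature_map[i + a][j + b]
                for a in range(size) for b in range(size))
            for j in range(0, w - size + 1, size)
        ]
        for i in range(0, h - size + 1, size)
    ]

def image_with_block(row, col, size=6):
    """size x size image, zero except for a 2x2 block of ones at (row, col)."""
    return [[1 if row <= i < row + 2 and col <= j < col + 2 else 0
             for j in range(size)] for i in range(size)]

def features(image):
    """Convolution, thresholding and pooling: one layer of a tiny hand-made network."""
    kernel = [[1, 1], [1, 1]]  # hypothetical 2x2 block detector
    response = relu_with_bias(conv2d_valid(image, kernel), bias=-3)
    return max_pool(response, 2)

print(features(image_with_block(2, 2)))  # [[0, 0], [0, 1]]
print(features(image_with_block(2, 3)))  # [[0, 0], [0, 1]], same after a 1-pixel shift
print(features(image_with_block(2, 0)))  # [[0, 0], [1, 0]], a larger shift is still visible
```

The one-pixel shift leaves the pooled output unchanged only because both detections fall inside the same pooling window; pooling gives local, not global, translation invariance.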

Syllabus

1. Motivation of deep

convolutional networks by image statistics

2. Biological

background: Visual cortex

3. Feed-forward convolutional networks for

image categorization

4. Object detection

5. Semantic

segmentation

6. Recurrent convolutional networks for video

processing

7. 3D perception

8. Spatial image transformations

References

[1] Y. LeCun, L.

Bottou, Y. Bengio, P. Haffner: Gradient-based learning applied to

document recognition. Proceedings of the IEEE 86(11):2278-2324, 1998

[2] S. Behnke: Hierarchical neural networks for image interpretation,

LNCS 2766, Springer, 2003.

[3] D. Scherer, A. C. Müller, S. Behnke:

Evaluation of pooling operations in convolutional architectures for

object recognition. ICANN, 2010.

[4] D. Scherer, H. Schulz, S. Behnke:

Accelerating large-scale convolutional neural networks with parallel

graphics multiprocessors. ICANN, 2010.

[5] H. Schulz, S. Behnke:

Learning Object-Class Segmentation with Convolutional Neural Networks,

ESANN, 2012.

[6] R. Memisevic: Learning to Relate Images. IEEE

Trans. Pattern Anal. Mach. Intell. 35(8): 1829-1846, 2013.

[7] J.

Schmidhuber: Deep learning in neural networks: An overview. Neural

Networks 61: 85-117, 2015.

[8] M. Schwarz, H. Schulz, S. Behnke: RGB-D

object recognition and pose estimation based on pre-trained

convolutional neural network features. ICRA, 2015.

[9] I.

Goodfellow, Y. Bengio, A. Courville: Deep learning, MIT Press, 2016.

[10] M. S. Pavel, H. Schulz, S. Behnke: Object class segmentation of

RGB-D video using recurrent convolutional neural networks. Neural

Networks 88:105-113, 2017.

[11] M. Schwarz, A. Milan, A.S.

Periyasamy, S. Behnke: RGB-D object detection and semantic segmentation

for autonomous manipulation in clutter. International Journal of

Robotics Research, 2017.

[12] J. Dai, H. Qi, Y. Xiong, Y. Li, G.

Zhang, H. Hu, Y. Wei: Deformable Convolutional Networks.

arXiv:1703.06211, 2017

Pre-requisites

Basic knowledge of

neural networks, image processing, and machine learning

Short-bio

Sven Behnke is professor

for Autonomous Intelligent Systems at the University of Bonn, Germany. He

received an MS degree in Computer Science in 1997 from

Martin-Luther-Universität Halle-Wittenberg and has been investigating

deep learning since. In 1998, he proposed a hierarchical recurrent

convolutional neural architecture – Neural Abstraction Pyramid – for

which he developed unsupervised methods for learning feature hierarchies

and supervised training for computer vision tasks like superresolution,

image reconstruction, semantic segmentation, and object detection. In

2002, he obtained a PhD in Computer Science on the topic Hierarchical

Neural Networks for Image Interpretation from Freie Universität Berlin.

He spent the year 2003 as postdoctoral researcher at the International

Computer Science Institute, Berkeley, CA. From 2004 to 2008, Sven Behnke

headed the Humanoid Robots Group at Albert-Ludwigs-Universität Freiburg.

His research interests include deep learning and cognitive robotics.

Summary

There has been a surge of

opportunities for the development of deep learning algorithms &

platforms for advanced vision systems and smart robots to operate

indoors (in messy living environments), underwater, and in the air (with

drones). This has been boosted by the availability of high performance

computing, large amounts of visual data (big data), and the recent

introduction of new sensors (e.g., 3D video sensors). These systems will

reduce the high costs associated with elderly health and home

care, and enhance competitiveness in agriculture & marine

economies. This lecture will give a brief introduction to Computer

Vision, then provide detailed coverage of artificial neural networks,

and focus on two main deep learning networks, namely Convolutional

Neural Networks (CNNs), and Auto-encoders and their applications in the

development of vision systems.

Syllabus

Session 1: In this

session we will address the following: what is computer vision; feature

extraction and classification; why deep learning (engineered features vs

learned features); importance of sensing, high performance computing,

and big data for deep learning; image understanding and the ultimate goal of

computer vision.

Session 2: Artificial Neural Networks

basics: artificial neuron characteristics (activation function); types

of architectures (feed-forward networks vs. recurrent networks); types

of learning rules.

Session 3: Feed-forward networks and their

training: Single Layer Perceptron (SLP), Multi-layer Perceptron (MLP),

and back-propagation.

Session 4: Deep learning and why

training is difficult with more layers, and how to solve it (how to

train and debug large-scale and deep multi-layer neural networks).

Session 5: Convolutional Neural Networks & variants (with

tools & libraries), and their application to computer vision.

Session 6: Auto-Encoders and their application to computer

vision

References

Artificial Neural

Network basics:

[1] M. Bennamoun, Lecture notes on Slideshare:

https://www.slideshare.net/MohammedBennamoun/presentations

[2] Richard Lippmann, “An Introduction to Computing with Neural Nets”, IEEE

ASSP Magazine, April 1987.

[3] Richard Lippmann, “Pattern

Classification using Neural Networks”, IEEE Communications Magazine,

November 1989.

[4] Anil Jain, Jianchang Mao, and K.M. Mohiuddin,

“Artificial Neural Networks: A Tutorial”, IEEE Computer Magazine, Vol.

29, Issue 3, March 1996.

[5] L. Fausett, “Fundamentals of Neural

Networks”, Prentice-Hall, 1994.

[6] J.M. Zurada, “Introduction to

Artificial Neural Systems”, West Publishing Company, 1992.

Deep

learning:

[7] H. Larochelle, Y. Bengio, J. Louradour, and P.

Lamblin, “Exploring Strategies for Training Deep Neural Networks”,

Journal of Machine Learning Research, 2009.

[8] Y. Bengio, “Learning

Deep Architectures for AI”, Foundations and Trends in Machine Learning,

2009.

CNN:

[9] Y. LeCun, L. Bottou, G. Orr, and K.-R. Müller,

“Efficient backprop,” in Neural Networks: Tricks of the Trade. New York,

NY, USA: Springer, 2012, vol. 7700, pp. 9–48.

[10] S. H. Khan, M.

Bennamoun, F. Sohel, and R. Togneri, “Automatic shadow detection and

removal from a single image”, IEEE transactions on pattern analysis and

machine intelligence, Vol. 38(3), 2016.

Auto-encoders:

[11] M. Hayat, M. Bennamoun, S. An, “Deep reconstruction models for image set

classification”, Vol. 37 (4), 2015.

Pre-requisites

Basic knowledge of

linear algebra and statistics

Short-bio

Mohammed Bennamoun is

currently a W/Professor at the School of Computer Science and Software

Engineering at The University of Western Australia. He lectured in

robotics at Queen's, and then joined QUT in 1993 as an associate

lecturer. He then became a lecturer in 1996 and a senior lecturer in

1998 at QUT. In January 2003, he joined The University of Western

Australia as an associate professor. He was also the director of a

research center from 1998-2002. He is the co-author of the book Object

Recognition: Fundamentals and Case Studies (Springer-Verlag, 2001). He

has published close to 100 journal and 250 conference publications. His

areas of interest include control theory, robotics, obstacle avoidance,

object recognition, artificial neural networks, signal/image processing,

and computer vision. More information is available on his website.

This course will be delivered with the help of Dr. H. Rahmani

& Dr. S.A. Shah:

Syed Afaq Ali Shah obtained his PhD from

the University of Western Australia (UWA) in the area of 3D computer

vision. He currently works as a research associate at UWA. His research

interests include deep learning, 3D face/object recognition, 3D

modelling and image segmentation.

Hossein Rahmani completed

his PhD at The University of Western Australia. He has published

several papers in conferences and journals such as CVPR, ECCV, and

TPAMI. He is currently a Research Fellow in the School of Computer

Science and Software Engineering at The University of Western Australia.

His research interests include computer vision, action recognition, 3D

shape analysis, and machine learning.

Summary

Applied Deep Learning for

Visual Computing, Autonomous Vehicles, and Gaming

Syllabus

Research and development

at NVIDIA focuses on applying deep learning to key application areas for

visual computing:

- self-driving cars

- inside-out tracking for

VR/AR

- text recognition

- gesture recognition and analysis

- smart cameras

- video gaming

- robotics

Building these

systems requires a mix of deep learning techniques, distributed systems,

efficient GPU computing, combined with traditional methods from computer

vision, image processing, and statistical decision theory.

My

lectures will focus on techniques and models that are important for

building such systems, but that we find (in our interviews) are often

not covered well in academic deep learning courses.

Part 1:

Review of deep networks for computer vision applications. Connections

between deep learning algorithms, classical machine learning, and

signal/image processing. Bayesian decision theory and modular neural

networks.

Part 2: From model-based approaches to deep learning.

Scene text and document recognition as model-based computer vision;

statistical models of context; deep learning variants; single

dimensional and multi-dimensional recurrent neural networks. Style

modeling. Large scale semi-supervised and unsupervised learning.

Part 3: Optical flow, texture segmentation, semantic segmentation. An

overview of classical models and image formation. The use of synthetic

data and style transfer for synthetic training. Current deep-learning

based approaches. Multidimensional LSTMs for segmentation

problems.

References

Goodfellow, Ian, Yoshua

Bengio, and Aaron Courville. Deep learning. MIT press, 2016.

Strang,

Gilbert, and Kaija Aarikka. Introduction to applied mathematics. Vol.

16. Wellesley, MA: Wellesley-Cambridge Press, 1986.

Pre-requisites

Participants should

have basic familiarity with deep learning, including common

architectures and training methods.

Short-bio

Thomas Breuel works on

deep learning and computer vision at NVIDIA Research, with applications

to self-driving cars, gaming, and image/video analysis. Prior to NVIDIA,

he was a full professor of computer science at the University of

Kaiserslautern (Germany) and worked as a researcher at Google, Xerox

PARC, the IBM Almaden Research Center, IDIAP, Switzerland, as well as a

consultant to the US Bureau of the Census. He is an alumnus of the

Massachusetts Institute of Technology and Harvard University.

Summary

For the purposes of this

short course, behaviors are the dynamics exhibited by systems over time.

There is growing interest and success in applying various types of

machine learning techniques to the modeling and learning of human,

natural and machine generated behaviors. This course will review a

variety of behavior modeling approaches and provide a tutorial on

classical, recurrent neural network and recurrent deep learning methods

for learning behaviors. Application areas will include social behaviors,

computer systems behaviors for security purposes as well as other

domains.

Syllabus

- Overview of behavior

modeling and application domains

- Goals of and metrics for behavior

learning

- Recurrent neural networks and long short-term memory

(LSTM)

- Software implementations and hands on exercises

(TensorFlow, Keras)

- Comparisons with classical methods

References

Pre-requisites

Familiarity with

numerical linear algebra, probability and statistics, machine learning

basics.

Short-bio

George Cybenko is the

Dorothy and Walter Gramm Professor of Engineering at Dartmouth.

Professor Cybenko has made research contributions in signal processing,

neural computing, parallel computing and computational behavioral

analysis. He was the Founding Editor-in-Chief of IEEE/AIP Computing in

Science and Engineering, IEEE Security & Privacy and IEEE Transactions

on Computational Social Systems. Professor Cybenko is a Fellow of the

IEEE, received the 2016 SPIE Eric A. Lehrfeld Award for outstanding

contributions to global homeland security and the US Air Force

Commander's Public Service Award. He obtained his BS (Toronto) and PhD

(Princeton) degrees in Mathematics. He has held visiting appointments at

MIT, Stanford and Leiden University where he was the Kloosterman

Visiting Distinguished Professor. Cybenko is co-founder of Flowtraq Inc

(http://www.flowtraq.com) which focuses on commercial software and

services for large-scale network flow security and analytics.

Summary

The course will cover the

primary exact and approximate algorithms for reasoning with

probabilistic graphical models (e.g., Bayesian and Markov networks,

influence diagrams, and Markov decision processes). We will present

inference-based, message passing schemes (e.g., variable elimination)

and search-based, conditioning schemes (e.g., cycle-cutset conditioning

and AND/OR search). Each algorithm class possesses distinct

characteristics and in particular has different time vs. space behavior.

We will emphasize the dependence of the methods on graph parameters such

as the treewidth, cycle-cutset, and (pseudo-tree) height. We will start

from exact algorithms and move to approximate schemes that are anytime,

including weighted mini-bucket schemes with cost-shifting and MCMC

sampling.
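
As a minimal sketch of the inference-based schemes mentioned above, the example below runs variable elimination on a three-variable chain A -> B -> C and checks the result against brute-force enumeration. The network, its probability tables and the function names are hypothetical and chosen only for illustration.

```python
from itertools import product

# Hypothetical binary chain A -> B -> C; all numbers are made up for illustration.
p_a = {0: 0.6, 1: 0.4}
p_b_given_a = {(0, 0): 0.7, (0, 1): 0.3, (1, 0): 0.2, (1, 1): 0.8}  # key: (a, b)
p_c_given_b = {(0, 0): 0.9, (0, 1): 0.1, (1, 0): 0.5, (1, 1): 0.5}  # key: (b, c)

def marginal_c_by_elimination():
    """Compute P(C) by summing out A, then B, one variable at a time."""
    # Eliminate A: message m_a(b) = sum_a P(a) P(b | a).
    m_a = {b: sum(p_a[a] * p_b_given_a[(a, b)] for a in (0, 1)) for b in (0, 1)}
    # Eliminate B: P(c) = sum_b m_a(b) P(c | b).
    return {c: sum(m_a[b] * p_c_given_b[(b, c)] for b in (0, 1)) for c in (0, 1)}

def marginal_c_by_enumeration():
    """Compute P(C) by summing the full joint over every assignment."""
    result = {0: 0.0, 1: 0.0}
    for a, b, c in product((0, 1), repeat=3):
        result[c] += p_a[a] * p_b_given_a[(a, b)] * p_c_given_b[(b, c)]
    return result

print(marginal_c_by_elimination())
print(marginal_c_by_enumeration())
```

On a chain of n binary variables, elimination in this order touches a number of table entries that grows linearly in n, whereas enumeration visits all 2^n joint assignments; this is the kind of dependence on graph structure that the treewidth captures in general.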

Syllabus

Class 1: Introduction and Inference schemes

• Basic Graphical models

– Queries

– Examples/applications/tasks

– Algorithms overview

• Inference algorithms, exact

– Bucket elimination

– Jointree clustering

– Elimination orders

• Decomposition Bounds

– Dual Decomposition, GDD

– MBE/WMBE

– Belief Propagation

Class 2: Search Schemes

• AND/OR search spaces, pseudo-trees

– AND/OR search trees

– AND/OR search graphs

– Generating good pseudo-trees

• Heuristic search for AND/OR spaces

– Brute-force traversal

– Depth-first AND/OR branch and bound

– Best-first AND/OR search

– The Guiding MBE heuristic

– Marginal Map (max-sum-product)

• Hybrids of search and Inference

Class 3:

• Variational methods

– Convexity & decomposition bounds

– Variational forms & the marginal polytope

– Message passing algorithms

– Convex duality relationships

• Monte Carlo sampling

– Basics

– Importance sampling

– Markov chain Monte Carlo

– Integrating inference and sampling

References

Rina Dechter:

Reasoning with Probabilistic and Deterministic Graphical Models: Exact

Algorithms. Synthesis Lectures on Artificial Intelligence and Machine

Learning, Morgan & Claypool Publishers 2013

Alexander Ihler,

Natalia Flerova, Rina Dechter, and Lars Otten. "Join-graph based

cost-shifting schemes" in Proceedings of UAI 2012

Dechter, R.

and Rish, I., "Mini-Buckets: A General Scheme for Bounded

Inference" In "Journal of the ACM", Vol. 50, Issue 2:

pages 107-153, March 2003.

Robert Mateescu, Kalev Kask, Vibhav

Gogate, and Rina Dechter. "Join-Graph Propagation Algorithms."

JAIR'2009

Pre-requisites

Basic Computer

Science

Short-bio

Rina Dechter's research

centers on computational aspects of automated reasoning and knowledge

representation including search, constraint processing, and

probabilistic reasoning. She is a professor of computer science at the

University of California, Irvine. She holds a Ph.D. from UCLA, an M.S.

degree in applied mathematics from the Weizmann Institute, and a B.S. in

mathematics and statistics from the Hebrew University in Jerusalem. She

is an author of Constraint Processing published by Morgan Kaufmann

(2003), and Reasoning with Probabilistic and Deterministic Graphical

Models: Exact Algorithms by Morgan and Claypool publishers, 2013, has

co-authored over 150 research papers, and has served on the editorial

boards of: Artificial Intelligence, the Constraint Journal, Journal of

Artificial Intelligence Research (JAIR), and Journal of Machine Learning

Research (JMLR). She has been a fellow of the American Association for

Artificial Intelligence since 1994, was a Radcliffe Fellow 2005–2006, received

the 2007 Association of Constraint Programming (ACP) Research Excellence

Award, and she is a 2013 ACM Fellow. She has been Co-Editor-in-Chief of

Artificial Intelligence since 2011. She is also co-editor with Hector

Geffner and Joe Halpern of the book Heuristics, Probability and

Causality: A Tribute to Judea Pearl, College Publications, 2010.

Alex Ihler is an associate professor of computer science at the

University of California, Irvine. He received his MS and PhD degrees

from the Massachusetts Institute of Technology in 2000 and 2005, and a

BS from the California Institute of Technology in 1998. His research

spans several areas of machine learning, with a particular focus on

probabilistic, graphical model representations, including Bayesian

networks, Markov random fields, and influence diagrams, and with

applications to domains such as sensor networks, computer vision, and

computational biology. He is the co-author of over 60 research papers,

and the recipient of an NSF CAREER award. He is the director of UC

Irvine's Center for Machine Learning, and has served on the editorial

boards of Machine Learning (MLJ), Artificial Intelligence (AIJ), and the

Journal of Machine Learning Research (JMLR).

Summary

Syllabus

Part I: Basics of Machine

Learning and Applications --- deep and shallow

- machine learning

founding principles

- machine learning and deep learning

- shallow machine learning vs. deep machine learning

- taxonomy of

machine learning: a learning-paradigm perspective

- taxonomy of

speech, image, text, and multi-modal applications: a signal-processing

perspective

Part II: Deep Neural Networks (DNN): Why gradients

vanish & how to rescue them

- history of neural nets for speech

recognition: why they failed

- one equation for backprop update ---

why gradients may easily vanish for DNN learning

- five ways of

remedying vanishing gradients

- an alternative way of training DNN

(deep stacking net)

- recurrent nets: my experiments in the 90s (for

speech) and current perspectives
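
The vanishing-gradient effect that Part II refers to can be seen in a one-unit-per-layer toy network. The sketch below is an illustrative assumption, not the backprop equation used in the lectures: it chains scalar sigmoid layers and applies the chain rule, so every layer scales the gradient by w * sigmoid'(z), and sigmoid'(z) never exceeds 0.25.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def input_gradient(x, weights):
    """d(output)/d(input) for a chain of scalar layers h = sigmoid(w * h_prev)."""
    h, grad = x, 1.0
    for w in weights:
        h = sigmoid(w * h)
        # Chain rule: this layer contributes a factor w * sigmoid'(z),
        # where sigmoid'(z) = h * (1 - h) is at most 0.25.
        grad *= w * h * (1.0 - h)
    return grad

# Hypothetical setting: every weight equals 1.0 and the input is 0.5.
gradients = {depth: input_gradient(0.5, [1.0] * depth) for depth in (1, 5, 10, 20)}
for depth, grad in gradients.items():
    print(depth, grad)
```

With weights of magnitude at most 1 the gradient shrinks at least as fast as 0.25 to the power of the depth, which is why the earliest layers of a deep sigmoid network receive almost no learning signal.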

Part III: How Deep Learning

Disrupted Speech (and Image) Recognition

- shallow models dominating

speech: 30+ years from the 80s

- deep generative models for speech: 10

years of research before DNN disruption

- pros and cons of

generative vs discriminative models

- how speech is produced and

perceived by humans: a comprehensive computational model

- several

theories of human perception

- variational inference/learning for

deep generative speech model (experiments late 90's to mid 2000)

- a

very different kind of deep generative model: deep belief nets

(2006)

- the arrival of DNN for speech and its early successes: a

historical perspective (2009-2011)

- more recent development of deep

learning for speech

- a perspective on recent innovations in speech

recognition

- how to do truly unsupervised learning for future

speech recognition (and other AI tasks)

Part IV: Deep Learning for

Text and Multi-Modal Processing

- AI to move from perception to

cognition: key roles of language/text

- concept of symbolic/semantic

embedding

- word and text embedding

- build text embedding on

top of sub-word units: practical necessity for many applications

-

distant supervised embedding

- deep structured semantic modeling

(DSSM)

- use of DSSM for multi-modal deep learning: Microsoft's

first generation image captioning system

- DSSM for contextual

search in Microsoft Office/Word

Part V: Limitations of Current Deep

Learning and How to Overcome Them

- Interpretability problem

-

Symbolic-neural integration for reasoning: tensor-product

representations

- Where do labels come from: the need for unsupervised

learning via rich priors and self learning via interactions

-

Vertical applications

References

G. E. Hinton, R.

Salakhutdinov. 'Reducing the Dimensionality of Data with Neural

Networks'. Science 313: 504–507, 2006.

G. E. Hinton, L. Deng, D. Yu,

et al. 'Deep Neural Networks for Acoustic Modeling in Speech Recognition:

The shared views of four research groups,' IEEE Signal Processing

Magazine, pp. 82–97, November 2012. (plus other papers in the same

special issue)

G. Dahl, D. Yu, L. Deng, A. Acero. 'Context-Dependent

Pre-Trained Deep Neural Networks for Large-Vocabulary Speech

Recognition'. IEEE Trans. Audio, Speech, and Language Processing, Vol

20(1): 30–42, 2012. (plus other papers in the same special issue)

Y.

Bengio, A. Courville, and P. Vincent. 'Representation Learning: A Review

and New Perspectives,' IEEE Trans. PAMI, special issue Learning Deep

Architectures, 2013.

J. Schmidhuber. 'Deep learning in neural

networks: An overview,' arXiv, October 2014.

Y. LeCun, Y. Bengio,

and G. Hinton. 'Deep Learning', Nature, Vol. 521, May 2015.

J.

Bellegarda and C. Monz. 'State of the art in statistical methods for

language and speech processing,' Computer Speech and Language, 2015.

Li Deng, Navdeep Jaitly. CHAPTER 1.2 Deep Discriminative and Generative

Models for Speech Pattern Recognition, in Handbook of Pattern

Recognition and Computer Vision (Ed. C.H. Chen), World Scientific, 2016.

Dong Yu and Li Deng, Automatic Speech Recognition – A Deep Learning

Approach, Springer, 2015.

Li Deng and Dong Yu, DEEP LEARNING —

Methods and Applications. NOW Publishers, June 2014.

Goodfellow,

Bengio, Courville. Deep Learning, MIT Press, 2016.

Li Deng and Yang

Liu (eds), Deep Learning in Natural Language Processing, Springer,

2017-2018.

Li Deng and Doug O’Shaughnessy, SPEECH PROCESSING — A

Dynamic and Optimization-Oriented Approach, Marcel Dekker Inc., June

2003.

Pre-requisites

Short-bio

Li Deng recently joined

Citadel, one of the most successful investment firms in the world, as

its Chief AI Officer. Prior to Citadel, he was Chief Scientist of AI and

Partner Research Manager at Microsoft. Prior to Microsoft, he was a

tenured Full Professor at the University of Waterloo in Ontario, Canada

as well as teaching/research at MIT (Cambridge), ATR (Kyoto, Japan) and

HKUST (Hong Kong). He is a Fellow of the IEEE, a Fellow of the

Acoustical Society of America, and a Fellow of the ISCA. He has been

Affiliate Professor at University of Washington (since 2000).

He

was on the Boards of Governors of the IEEE Signal Processing Society and of the

Asia-Pacific Signal and Information Processing Association (2008-2010).

He was Editor-in-Chief of IEEE Signal Processing Magazine (2009-2011)

and IEEE/ACM Transactions on Audio, Speech, and Language Processing

(2012-2014), for which he received the IEEE SPS Meritorious Service

Award. He received the 2015 IEEE SPS Technical Achievement Award for his

'Outstanding Contributions to Automatic Speech Recognition and Deep

Learning' and numerous best paper and scientific awards for his

contributions to artificial intelligence, machine learning, multimedia

signal processing, speech and human language technology, and their

industrial applications. (https://lidengsite.wordpress.com/publications-books/)

Summary

In this talk, I start with

a brief introduction to the history of deep learning and its application

to natural language processing (NLP) tasks. Then I describe in detail

the deep learning technologies that have recently been developed for three

areas of NLP tasks. First is a series of deep learning models to model

semantic similarities between texts and images, the task that is

fundamental to a wide range of applications, such as Web search ranking,

recommendation, image captioning and machine translation. Second is a

set of neural models developed for machine reading comprehension and

question answering. Third is the use of deep learning for various

dialogue agents, including task-completion bots and social chat bots.

Syllabus

Part 1. Introduction to

deep learning and natural language processing (NLP)

- A brief

history of deep learning

- An example of neural models for query

classification

- Overview of deep learning models for NLP tasks

Part 2. Deep Semantic Similarity Models (DSSM) for text processing

-

Challenges of modeling semantic similarity

- What is DSSM

- DSSM

for Web search ranking

- DSSM for recommendation

- DSSM for

automatic image captioning and other tasks
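To give a feel for the kind of scoring that semantic similarity models build on, here is a minimal sketch under stated assumptions. It is not the DSSM itself: the learned nonlinear layers are omitted, and only two ingredients are shown, a sub-word (letter-trigram) bag representation of text and cosine similarity between the resulting vectors. The function names and the example strings are hypothetical.

```python
import math
from collections import Counter

def letter_trigrams(text):
    """Bag of letter trigrams per word, with '#' as a word-boundary marker."""
    counts = Counter()
    for word in text.lower().split():
        padded = "#" + word + "#"
        for i in range(len(padded) - 2):
            counts[padded[i:i + 3]] += 1
    return counts

def cosine(u, v):
    """Cosine similarity of two sparse count vectors (0.0 if either is empty)."""
    dot = sum(u[k] * v[k] for k in u if k in v)
    norm_u = math.sqrt(sum(c * c for c in u.values()))
    norm_v = math.sqrt(sum(c * c for c in v.values()))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0

def rank(query, documents):
    """Return (score, document) pairs sorted from most to least similar."""
    q = letter_trigrams(query)
    scored = [(cosine(q, letter_trigrams(d)), d) for d in documents]
    return sorted(scored, reverse=True)

# Hypothetical query and documents.
docs = ["deep learning for web search", "cooking pasta at home"]
for score, doc in rank("web searching", docs):
    print(round(score, 3), doc)
```

Because the representation works on sub-word units, "searching" still overlaps with "search" even though the two words differ. A trained model would replace the raw trigram counts with learned low-dimensional vectors before taking the cosine.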

Part 3. Deep learning for

Machine Reading Comprehension (MRC) and Question Answering (QA)

- Challenges of MRC and QA

- A brief review of symbolic approaches

- From symbolic to neural approaches

- State of the art MRC

models

- Toward an open-domain QA system

Part 4. Deep learning

for dialogue

- Challenges of developing open-domain dialogue

agents

- The development of task-oriented dialogue agents using deep

reinforcement learning

- The development of neural conversation

engines for social chat bots

References

Part 1: Yih, He & Gao. Deep learning and continuous representations for natural language processing. Tutorial presented in HLT-NAACL-2015, IJCAI-2016.

Part 2 (DSSM): We have developed a series of deep semantic similarity models (DSSM, a.k.a. Sent2Vec), which have been used for many text and image processing tasks, including web search [Huang et al. 2013, Shen et al. 2014], recommendation [Gao et al. 2014a], machine translation [Gao et al. 2014b], and QA [Yih et al. 2015].

Part 3 (MRC): We released a new MRC dataset, called MS MARCO; and have developed a series of reasoning networks for MRC, aka ReasoNet and ReasoNet with shared memory.

Part 4 (Dialogue): We have developed neural network models for social bots trained on Twitter data [project site] and task-completion bots [Lipton et al. 2016; Bhuwan et al. 2016] trained via deep reinforcement learning using a user simulator.

Pre-requisites

No prerequisites.

Short-bio

Jianfeng Gao is Partner Research Manager in the Deep Learning Technology Center (DLTC) at Microsoft Research, Redmond. He works on deep learning for text and image processing and leads the development of AI systems for dialogue, machine reading comprehension (MRC), question answering (QA), and enterprise applications. From 2006 to 2014, he was Principal Researcher in the Natural Language Processing Group at Microsoft Research, Redmond, where he worked on Web search, query understanding and reformulation, ads prediction, and statistical machine translation. From 2005 to 2006, he was a research lead in the Natural Interactive Services Division at Microsoft, where he worked on Project X, an effort to develop a natural user interface for Windows. From 1999 to 2005, he was Research Lead in the Natural Language Computing Group at Microsoft Research Asia. He, together with his colleagues, developed the first Chinese speech recognition system released with Microsoft Office, the Chinese/Japanese Input Method Editors (IME), which were the leading products in the market, and the natural language platform for Windows Vista.

Summary

A confluence of new

artificial neural network architectures and unprecedented compute

capabilities based on numeric accelerators has reinvigorated interest in

Artificial Intelligence based on neural processing. The first

successful deployments in hyperscale internet services are now driving

broader commercial interest in adopting Deep Learning as a design

principle for cognitive applications in the enterprise. In this class,

we will review hardware acceleration and co-optimized software

frameworks for Deep Learning, and discuss model development and

deployment to accelerate adoption of Deep Learning based solutions for

enterprise deployments.

Syllabus

Session 1:

1. Hardware Foundations of the Great AI

Re-Awakening

2. Deployment models for DNN Training and

Inference

Session 2:

1. Optimized High Performance Training

Frameworks

2. Parallel Training Environments

Session

3:

1. Developing Models with Expressive Interfaces

2. Lab Demo

References

M. Gschwind, Need for

Speed: Accelerated Deep Learning on Power, GPU Technology Conference,

Washington DC, October 2016.

Pre-requisites

tbd.

Short-bio

Dr. Michael Gschwind is

Chief Engineer for Machine Learning and Deep Learning for IBM Systems

where he leads the development of hardware/software integrated products

for cognitive computing. During his career, Dr. Gschwind has been a

technical leader for IBM’s key transformational initiatives, leading the

development of the OpenPOWER Hardware Architecture as well as the

software interfaces of the OpenPOWER Software Ecosystem. In previous

assignments, he was a chief architect for Blue Gene, POWER8, and POWER7.

As chief architect for the Cell BE, Dr. Gschwind created the first

programmable numeric accelerator, serving as chief architect for both

hardware and software architecture. In addition to his industry career,

Dr. Gschwind has held faculty appointments at Princeton University and

Technische Universität Wien. While at Technische Universität Wien, Dr.

Gschwind invented the concept of neural network training and inference

accelerators. Dr. Gschwind is a Fellow of the IEEE, an IBM Master

Inventor and a Member of the IBM Academy of Technology.

Summary

Human emotion is an

internal state of the human brain that leads to different decisions and

behaviors given the same sensory inputs. Therefore, for efficient interaction

between humans and machines, e.g. chatbots, it is important for the machine

to estimate human emotions. Because emotion is an internal state, the

classification accuracy of emotion from a single modality is not

high. For example, our result ranked first with only 61.6%

accuracy for the emotion recognition task from facial images at

EmotiW2015 challenge. In this tutorial we will first present the

hierarchical committee machine that won the EmotiW2015 challenge, and then

extend the ideas to multi-modal classification with top-down attention

and to the identification of brain signals for two other internal

states of the brain.

In the first talk (9:30 to 11:15 am), we

will start with our hierarchical committee machine of many deep neural

networks for the EmotiW2015 challenge. To take advantage of the

committee, it is important to recruit mutually independent committee

members. For example, we deployed 6 different pre-processing methods, 12

network architectures, and 3 random initializations. A 2-layer

hierarchical committee was also incorporated. In addition, we made extensive

use of data augmentation to win EmotiW2015.
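The committee structure described in this paragraph can be sketched as follows. This is an illustration under loud assumptions, not the authors' system: the member outputs are random stand-ins for trained networks, the number of classes (7) is assumed, the grouping of the two levels (first over random initializations, then over all pre-processing and architecture pairs) is assumed, and plain averaging is swapped in for whatever fusion rule the actual system used.

```python
import itertools
import random

# 6 pre-processing variants, 12 architectures, 3 initializations come from the
# text; the class count of 7 is an assumption made for this sketch.
N_PREPROC, N_ARCH, N_INIT, N_CLASSES = 6, 12, 3, 7

def fake_member_output(rng):
    """Stand-in for one trained network: a random probability vector."""
    raw = [rng.random() for _ in range(N_CLASSES)]
    total = sum(raw)
    return [r / total for r in raw]

def average(vectors):
    """Element-wise mean of equally long probability vectors."""
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]

rng = random.Random(0)
members = {
    key: fake_member_output(rng)
    for key in itertools.product(range(N_PREPROC), range(N_ARCH), range(N_INIT))
}

# Level 1 (assumed grouping): fuse the random initializations of each
# (pre-processing, architecture) pair.
level1 = [
    average([members[(p, a, i)] for i in range(N_INIT)])
    for p, a in itertools.product(range(N_PREPROC), range(N_ARCH))
]
# Level 2: fuse the level-1 committees into the final decision.
final = average(level1)
predicted_class = max(range(N_CLASSES), key=final.__getitem__)
print(len(members), len(level1), predicted_class)
```

With the counts given in the text, the full committee has 6 x 12 x 3 = 216 members, which the first level reduces to 72 sub-committee outputs before the final fusion.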

In the

second talk (2:30 to 4:15 pm), to improve the accuracy,

we will add speech and text modalities to recognize human emotions. We

will discuss how to combine those three modalities based on Early

Integration and Late Integration models. We will also introduce a new

integration method based on top-down attention. Although bottom-up

attention has become quite popular in deep learning, top-down attention

has played a critical role in the recognition of noisy and superimposed

patterns, and will be further extended to multi-modal integration.

In fact, there are many more top-down synapses than bottom-up

synapses in our brain.

In the third talk (11:45 am to

1:30 pm), we will introduce more general brain internal states,

of which emotion is a popular example. In particular, we hypothesized 2

axes of the internal state space, i.e., agreement/disagreement with and

trust/distrust of conversational counterparts during conversation, and

identified fMRI and/or EEG signal components related to those internal

states. Then, we will propose a method that uses these brain signals

to generate near-ground-truth labels of brain internal states, which

will be used to train classifiers from audio-visual signals.

Syllabus

1. Hierarchical Committee

Machine for the Emotion Recognition in the Wild 2015

- A brief

history of emotion recognition

- Deep learning for emotion

recognition from facial images

- Learning facial representations for

emotion recognition

- Deep CNN architectures for emotion

recognition

- Hierarchical Committee Machine with many CNN

classifiers

2. Multi-modal Integration based on Top-Down

Attention

- Early Integration model with data concatenation

-

Late Integration model with committee machine

- Top-Down Attention

model with gated neural networks

3. Understanding Brain Internal

States with Brain Signals

- Agreement vs. Disagreement to others

during conversation

- Trust vs. Distrust to others during

conversation

- Known vs. Unknown for memory test

References

[Emotion

Recognition]

B.K. Kim, J. Roh, S.Y. Dong, S.Y. Lee, “Hierarchical

committee of deep convolutional neural networks for robust facial

expression recognition,” J Multimodal User Interfaces (2016)

10:173–189.

B.K. Kim, H. Lee, J. Roh, S.Y. Lee, “Hierarchical

Committee of Deep CNNs with Exponentially-Weighted Decision Fusion for

Static Facial Expression Recognition,” Proceedings of the 2015 ACM on

International Conference on Multimodal Interaction, pp. 427-434

(EmotiW2015 Challenge; Emotion Recognition in the Wild)

[Top-Down Attention for Multimodal Integration]

B.T. Kim and S.Y.

Lee, “Sequential Recognition of Superimposed Patterns with Top-Down

Selective Attention”, Neurocomputing, Vol. 58-60, pp. 633-640, June 2004.

C.H. Lee and S.Y. Lee, “Noise-Robust Speech Recognition Using

Top-Down Selective Attention with an HMM Classifier”, IEEE Signal

Processing Letters, Vol. 14, Issue 7, pp. 489-491, July 2007.

Ho-Gyeong Kim, Hwaran Lee, Geonmin Kim, Sang-Hoon Oh, and Soo-Young Lee

(in preparation, 2016).

[Classification of Brain Internal

States]

Suh-Yeon Dong, Bo-Kyeong Kim & Soo-Young Lee, “Implicit

agreeing/disagreeing intention while reading self-relevant sentences: A

human fMRI study”, Social Neuroscience, 2016, Vol. 11, No. 3, 221–232.

Suh-Yeon Dong, Bo-Kyeong Kim, Soo-Young Lee, “EEG-Based

Classification of Implicit Intention During Self-Relevant Sentence

Reading”, IEEE Transactions on Cybernetics, Vol. 46, No. 11, 2016, pp.

2535-2542.

un-Soo Jung, Dong-Gun Lee, Kyeongho Lee, Soo-Young Lee,

“Temporally Robust Eye Movements through Task Priming and

Self-referential Stimuli” (accepted in Scientific Reports)

Pre-requisites

No prerequisites.

Short-bio

Soo-Young Lee is a

professor of Electrical Engineering at Korea Advanced Institute of

Science and Technology. In 1997, he established the Brain Science

Research Centre at KAIST, and led Korean Brain Neuroinformatics Research

Program from 1998 to 2008. He is now also a Co-Director of the Center for

Artificial Intelligence Research at KAIST, and leads the Emotional

Dialogue Project, a Korean National Flagship Project. He is President of the

Asia-Pacific Neural Network Society in 2017, and has received the

Presidential Award from INNS and the Outstanding Achievement Award from

APNNS. His research interests lie in artificial cognitive

systems with human-like intelligent behavior based on the biological

brain information processing. He has worked on speech and image

recognition, natural language processing, situation awareness,

internal-state recognition, and human-like dialog systems. In particular,

among many internal states, he is interested in emotion, sympathy,

trust, and personality. His work combines computational models and cognitive

neuroscience experiments. His group ranked first in the

emotion recognition challenge from facial images (EmotiW; Emotion

Recognition in the Wild) in 2015.

Summary

Deep reinforcement

learning has enabled artificial agents to achieve human-level

performance across many challenging domains, e.g. playing Atari games

and Go. I will present several important algorithms including deep

Q-Networks, asynchronous actor-critic algorithms (A3C), DDPG, SVG, and

guided policy search. I will discuss major challenges and promising

results towards making deep reinforcement learning applicable to real

world problems in robotics and natural language processing.
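As a small warm-up for the value-based methods named in this summary, the following sketch shows the temporal-difference update that Q-learning is built on. It is a tabular simplification, not a deep Q-Network: a DQN replaces the table with a neural network and adds components such as experience replay, none of which appear here. The toy chain environment, the hyperparameters and all names are assumptions made for illustration.

```python
import random

N_STATES, ACTIONS = 5, (-1, +1)      # chain of states 0..4; move left or right
GAMMA, ALPHA, EPSILON = 0.9, 0.5, 0.2

def step(state, action):
    """Deterministic chain: reward 1 only on reaching the right end."""
    nxt = min(max(state + action, 0), N_STATES - 1)
    done = nxt == N_STATES - 1
    return nxt, (1.0 if done else 0.0), done

def train(episodes=500, seed=0):
    """Tabular Q-learning with an epsilon-greedy behavior policy."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            if rng.random() < EPSILON:
                a = rng.randrange(2)                      # explore
            else:
                best = max(q[state])                      # exploit, random tie-break
                a = rng.choice([i for i in (0, 1) if q[state][i] == best])
            nxt, reward, done = step(state, ACTIONS[a])
            # TD target: r + gamma * max_a' Q(s', a'), with no bootstrap at the end.
            target = reward + (0.0 if done else GAMMA * max(q[nxt]))
            q[state][a] += ALPHA * (target - q[state][a])
            state = nxt
    return q

q_table = train()
greedy = ["right" if q[1] > q[0] else "left" for q in q_table[:-1]]
print(greedy)
```

After training, the greedy policy moves right in every non-terminal state, and the learned values decay by a factor of GAMMA per step away from the goal.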

Syllabus

1. Introduction to

reinforcement learning (RL)

2. Value-based deep RL

Deep Q-learning (deep Q-Networks)

Programming assignment of deep Q-Networks in OpenAI Gym to

play Atari games

3. Policy-based deep RL

Policy

gradients

Asynchronous actor-critic algorithms (A3C)

Natural gradients and trust region optimization (TRPO)

Deep deterministic policy gradients (DDPG), SVG

4.

Model-based deep RL: AlphaGo and guided policy search

5. Deep

learning in multi-agent games: fictitious self-play

6. Inverse

RL

7. Transfer in RL

8. Frontiers

Application

to robotics

Application to natural language

understanding

References

Pre-requisites

Basic knowledge of

reinforcement learning, deep learning and Markov decision processes

Short-bio

Li Erran Li received his

Ph.D. in Computer Science from Cornell University advised by Joseph

Halpern. He is currently with Uber and an adjunct professor in the

Computer Science Department of Columbia University. Before that, he

worked as a researcher in Bell Labs. His research interests are AI, deep

learning, machine learning algorithms and systems. He is an IEEE Fellow

and an ACM Distinguished Scientist.

Summary

Deep learning is often

pitched as a general, universal solution to AI. The pitch promises that

with sufficient data, a generic neural architecture and learning

algorithm can perform end-to-end processing; it is not necessary to

understand the domain, engineer features, or specialize models. Although

this fantasy holds true in the limit of infinite data and infinite

computing cycles, bounds on either -- or on the quality or completeness

of data -- often make the promise of deep learning hollow. To overcome

limitations of data and computing, an alternative is to customize models

to characteristics of the domain. Much of the art of modern deep

learning is determining how to incorporate diverse forms of domain

knowledge into a model via its representations, architecture, loss

function, and data transformations. Domain-appropriate biases constrain

the learning problem and thereby compensate for data limitations. A

classic form of bias for vision tasks--used even before the invention of

back propagation--is the convolutional architecture, exploiting the

homogeneity of image statistics and the relevance of local spatial

structure. Many generic tricks of the trade in deep learning can be cast

in this manner--as suitable forms of domain bias. Beyond these generic

tricks, I will work through illustrations of domains in which prior

knowledge can be leveraged to creatively construct models. My own

particular research interest involves cognitively-informed machine

learning, where an understanding of the mechanisms of human perception,

cognition, and reasoning can serve as a powerful constraint on models

that are intended to predict human preferences and behavior.
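One way to see how a convolutional architecture constrains the learning problem is to count parameters. The sketch below is an illustration with assumed sizes (a 32 x 32 single-channel image and a 3 x 3 kernel), not an example from the lectures; the helper names are hypothetical.

```python
def dense_params(height, width, hidden_units):
    """Weights + biases of a fully connected layer on a flattened image."""
    return height * width * hidden_units + hidden_units

def conv_params(kernel, in_channels, out_channels):
    """Weights + biases of a 2-D convolution; independent of image size."""
    return kernel * kernel * in_channels * out_channels + out_channels

h = w = 32  # assumed image size
print(dense_params(h, w, hidden_units=h * w))                 # 1049600
print(conv_params(kernel=3, in_channels=1, out_channels=1))   # 10
```

Weight sharing (homogeneous image statistics) and local connectivity (local spatial structure) are what shrink roughly a million free parameters to ten for a layer producing an output map of the same size, which is the sense in which the bias compensates for limited data.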

Syllabus

* The scaling

problem

* Bias-variance dilemma

* Imposing domain-appropriate

bias

- via loss functions

- via representations and

representational constraints

- via data augmentation

- via

architecture design

* Case studies of model crafting: memory in

humans and recurrent networks

References

Geman, S., Bienenstock,

E., & Doursat, R. (1992). Neural networks and the bias-variance dilemma.

Neural Computation, v. 4, n. 1, pp. 1-58.

Other references will be

provided during the lectures.

Pre-requisites

Basic background in

probability and statistics

Short-bio

Michael Mozer received a

Ph.D. in Cognitive Science at the University of California at San Diego

in 1987. Following a postdoctoral fellowship with Geoffrey Hinton at the

University of Toronto, he joined the faculty at the University of

Colorado at Boulder and is presently a Professor in the Department of

Computer Science and the Institute of Cognitive Science. He is secretary

of the Neural Information Processing Systems Foundation and has served

as chair of the Cognitive Science Society. He is interested both in

developing machine learning algorithms that leverage insights from human

cognition, and in developing software tools to optimize human

performance using machine learning methods.

Summary

Applications of Deep

Learning Models in Human-Computer Interaction Research

Syllabus

The opportunities for

interaction with computer systems are rapidly expanding beyond

traditional input and output paradigms: full-body motion sensors,

brain-computer interfaces, 3D displays, and touch panels are now commonplace

commercial items. The profusion of new sensing devices for human input

and the new display channels which are becoming available offer the

potential to create more involving, expressive and efficient

interactions in a much wider range of contexts. Dealing with these

complex sources of human intention requires appropriate mathematical

methods; modelling and analysis of interactions requires sophisticated

methods which can transform streams of data from complex sensors into

estimates of human intention.

This tutorial will focus on the use of

inference and dynamical modelling in human-computer interaction. The

combination of modern statistical inference and real-time closed loop

modelling offers rich possibilities in building interactive systems, but

there is a significant gap between the techniques commonly used in HCI

and the mathematical tools available in other fields of computing

science. This tutorial aims to illustrate how to bring these

mathematical tools to bear on interaction problems, and will cover basic

theory and example applications from:

References

General basic

background in machine learning and interest in human-computer

interaction or information retrieval

Pre-requisites

tbc.

Short-bio

Roderick Murray-Smith is a

Professor of Computing Science at Glasgow University, leading the Inference, Dynamics and

Interaction research group, and heads the 50-strong Section on

Information, Data and Analysis, which also includes the Information

Retrieval, Computer

Vision & Autonomous systems and IDEAS Big Data groups. He

works in the overlap between machine learning, interaction design and

control theory. In recent years his research has included multimodal

sensor-based interaction with mobile devices, mobile spatial

interaction, AR/VR, Brain-Computer interaction and nonparametric machine

learning. Prior to this he held positions at the Hamilton Institute,

NUIM, Technical University of Denmark, M.I.T. (Mike Jordan’s lab), and

Daimler-Benz Research, Berlin, and was the Director of SICSA, the

Scottish Informatics and Computing Science Alliance (all academic CS

departments in Scotland). He works closely with the mobile phone

industry, having worked together with Nokia, Samsung, FT/Orange,

Microsoft and Bang & Olufsen. He was a member of Nokia's Scientific

Advisory Board and is a member of the Scientific Advisory Board for the

Finnish Centre of Excellence in Computational Inference Research. He has

co-authored three edited volumes, 29 journal papers, 18 book chapters,

and 88 conference papers.

Summary

The last 40 years have

seen dramatic progress in machine learning and statistical methods for

speech and language processing, such as speech recognition, handwriting

recognition and machine translation. Most of the key statistical

concepts had originally been developed for speech recognition. Examples

of such key concepts are the Bayes decision rule for minimum error rate

and probabilistic approaches to acoustic modelling (e.g. hidden Markov

models) and language modelling. Recently the accuracy of speech

recognition has been improved significantly by the use of artificial

neural networks, such as deep feedforward multi-layer perceptrons and

recurrent neural networks (incl. long short-term memory extension). We

will discuss these approaches in detail and how they fit into the

probabilistic approach.
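The Bayes decision rule mentioned in this summary picks the class with the highest posterior probability, which is equivalent to maximizing prior times likelihood. In speech recognition the prior typically comes from the language model and the likelihood from the acoustic model. The sketch below shows the rule in the log domain; the candidate transcriptions and all probabilities are hypothetical numbers chosen only for illustration.

```python
import math

def bayes_decision(log_prior, log_likelihood):
    """Return argmax_c [log p(c) + log p(x | c)], the minimum-error-rate choice."""
    return max(log_prior, key=lambda c: log_prior[c] + log_likelihood[c])

# Hypothetical scores for two candidate transcriptions of one utterance.
log_prior = {
    "recognize speech": math.log(0.7),      # language model p(W)
    "wreck a nice beach": math.log(0.3),
}
log_likelihood = {
    "recognize speech": math.log(0.02),     # acoustic model p(X | W)
    "wreck a nice beach": math.log(0.03),
}
print(bayes_decision(log_prior, log_likelihood))
```

Here the second candidate fits the acoustics slightly better, but the first wins once the prior is taken into account (0.7 x 0.02 = 0.014 against 0.3 x 0.03 = 0.009).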

Syllabus

Part 1: Statistical

Decision Theory, Machine Learning and Neural Networks.

Part 2:

Speech Recognition (Time Alignment, Hidden Markov models, neural nets,

attention models)

Part 3: Machine Translation (Word Alignment,

Hidden Markov models, neural nets, attention models).

References

Bourlard, H. and

Morgan, N., Connectionist Speech Recognition - A Hybrid Approach, Kluwer

Academic Publishers, ISBN 0-7923-9396-1, 1994.

L. Deng, D. Yu: Deep

learning: methods and applications. Foundations and Trends in Signal

Processing, Vol. 7, No. 3–4, pp. 197-387, 2014.

P. Koehn:

Statistical Machine Translation, Cambridge University Press, 2010.

Pre-requisites

Familiarity with

linear algebra, numerical mathematics, probability and statistics,

elementary machine learning.

Short-bio

Hermann Ney is a full

professor of computer science at RWTH Aachen University, Germany. His

main research interests lie in the area of statistical classification,

machine learning, neural networks and human language technology and

specific applications to speech recognition, machine translation and

handwriting recognition. In particular, he has worked on dynamic

programming and discriminative training for speech recognition, on

language modelling and on machine translation. His work has resulted in

more than 700 conference and journal papers (h-index 87, 39000

citations; estimated using Google scholar). He and his team contributed

to a large number of European (e.g. TC-STAR, QUAERO, TRANSLECTURES,

EU-BRIDGE) and American (e.g. GALE, BOLT, BABEL) large-scale joint

projects. Hermann Ney is a fellow of both IEEE and ISCA (Int. Speech

Communication Association). In 2005, he was the recipient of the

Technical Achievement Award of the IEEE Signal Processing Society. In

2010, he was awarded a senior DIGITEO chair at LIMSI/CNRS in Paris,

France. In 2013, he received the award of honour of the International

Association for Machine Translation. In 2016, he was awarded an advanced

grant of the European Research Council (ERC).

Summary

Syllabus

I-Requisites for a

Cognitive Architecture

• Processing in space

• Processing in time

and memory

• Top-down and bottom-up processing

• Extraction of

information from data with generative models

• Attention

II-

Putting it all together

• Empirical Bayes with generative models

• Clustering of time series with linear state models

•

Information Theoretic Autoencoders

III- Current work

•

Extraction of time signatures with kernel ARMA

• Attention Based

video recognition

• Augmenting Deep Learning with memory

References

Pre-requisites

Short-bio

Jose C. Principe is a

Distinguished Professor of Electrical and Computer Engineering at the

University of Florida where he teaches advanced signal processing,

machine learning and artificial neural networks (ANNs). He is Eckis

Professor and the Founder and Director of the University of Florida

Computational NeuroEngineering Laboratory (CNEL) www.cnel.ufl.edu. The

CNEL Lab innovated signal and pattern recognition principles based on

information theoretic criteria, as well as filtering in functional

spaces. His secondary area of interest has focused on applications to

computational neuroscience, Brain Machine Interfaces and brain dynamics.

Dr. Principe is a Fellow of the IEEE, AIMBE, and IAMBE. Dr. Principe

received the Gabor Award, from the INNS, the Career Achievement Award

from the IEEE EMBS and the Neural Network Pioneer Award, of the IEEE

CIS. He has more than 33 patents awarded and over 800 publications in the

areas of adaptive signal processing, control of nonlinear dynamical

systems, machine learning and neural networks, information theoretic

learning, with applications to neurotechnology and brain computer

interfaces. He directed 93 Ph.D. dissertations and 65 Master's theses. He

wrote in 2000 an interactive electronic book entitled 'Neural and

Adaptive Systems' published by John Wiley and Sons and more recently

co-authored several books on 'Brain Machine Interface Engineering'

Morgan and Claypool, 'Information Theoretic Learning', Springer, 'Kernel

Adaptive Filtering', Wiley and 'System Parameter Adaption: Information

Theoretic Criteria and Algorithms', Elsevier. He has received four

Honorary Doctor Degrees, from Finland, Italy, Brazil and Colombia, and

routinely serves on international scientific advisory boards of

Universities and Companies. He has received extensive funding from NSF,

NIH and DOD (ONR, DARPA, AFOSR).

Summary

This course will cover the

foundations of deep learning with its application to vision and text

understanding. Attendees will become familiar with two cornerstone

concepts of most successful applications of deep learning today:

convolutional neural networks, and embeddings. The former is employed in

audio and visual processing applications, while the latter is used for

representing text, graphs and other discrete or symbolic data. Finally,

we are going to learn about how we can further extend these methods to

deal with sequential data, like videos. Lectures will provide

intuitions, the underlying mathematics, typical applications, code

snippets and references. By the end of these three lectures, attendees

are expected to gain enough familiarity to be able to apply these basic

tools to standard datasets on their own.

Syllabus

Session 1 - Deep Learning

for Vision and Audio Processing Applications

The basics: from logistic regression to fully connected neural networks,

and from fully connected neural networks to convolutional neural

networks (CNNs).

Special layers used in vision applications.

Example of CNNs using the pyTorch open source library.

How neural networks

can be adapted to work with discrete symbolic data like text: the

concept of embedding.

Methods using embeddings for a variety of

text application tasks.

Example of learning from text using

pyTorch.

Learning from sequences: Recurrent Neural Networks (RNNs).

How to

train and generate from RNNs, variants of RNNs.

Examples of

applications of RNNs using pyTorch.
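The concept of embedding listed in this syllabus can be sketched without any deep learning library: each discrete token is mapped to an integer index, and the index selects a row of a real-valued table. The code below is a minimal illustration under stated assumptions, not course material. The table is randomly initialized and never trained, whereas in practice its entries are parameters learned by gradient descent (in PyTorch this role is played by `torch.nn.Embedding`). All function names and sentences are hypothetical.

```python
import random

def build_vocab(sentences):
    """Assign each distinct lowercase token an integer index."""
    vocab = {}
    for s in sentences:
        for tok in s.lower().split():
            vocab.setdefault(tok, len(vocab))
    return vocab

def init_embeddings(vocab_size, dim, seed=0):
    """Random embedding table with one row of `dim` floats per token."""
    rng = random.Random(seed)
    return [[rng.uniform(-0.1, 0.1) for _ in range(dim)] for _ in range(vocab_size)]

def embed(sentence, vocab, table):
    """Mean of the embedding rows of the known tokens in `sentence`.

    Returns an empty list when no token is in the vocabulary.
    """
    rows = [table[vocab[t]] for t in sentence.lower().split() if t in vocab]
    return [sum(col) / len(rows) for col in zip(*rows)]

# Hypothetical training sentences.
sentences = ["deep learning for text", "learning from text"]
vocab = build_vocab(sentences)
table = init_embeddings(len(vocab), dim=4)
print(embed("deep text", vocab, table))
```

Averaging the rows gives a fixed-size vector for a sentence of any length, which is the simplest way to feed text into a fully connected network.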

Pre-requisites

Basic knowledge of

linear algebra, calculus, and statistics.

Short-bio

Marc’Aurelio Ranzato is

currently a research scientist at the Facebook AI Research lab in New

York City. He previously worked at Google in the Brain team from 2011 to

2013, and before that, he was a post-doctoral fellow in Machine

Learning, University of Toronto, with Geoffrey Hinton. He earned his

Ph.D. in Computer Science at New York University advised by Yann LeCun.

He is originally from Padova in Italy, where he graduated in Electronics

Engineering. Marc’Aurelio is interested in Machine Learning, Computer

Vision, Natural Language Processing and, more generally, Artificial

Intelligence. More specifically, he has worked on methods to learn

hierarchical representations of data, unsupervised learning and methods

for structured prediction. His research has been applied to visual

object recognition, face recognition, speech recognition, machine

translation, summarization and many other tasks. Marc’Aurelio has served

as Area Chair for several major conferences, like NIPS, ICML, CVPR, and

ICCV. He has been Senior Program Chair for ICLR 2017 and guest editor

for IJCV.

Summary

In recent years, deep

convolutional neural networks (CNNs) have been shown to deliver

excellent performance on a variety of object detection tasks, and much

has been made of how these CNNs have been inspired by insights into how

the brain performs vision. Foremost among these insights was the notion

of the brain’s visual system as a 'simple-to-complex' feedforward

hierarchy of interleaving pooling and template match stages. Yet, the

neuroscience underlying CNNs is more than 20 years old, and neuroscience

research since then using various brain imaging techniques and other

experimental and computational approaches has dramatically advanced our

understanding of how the brain recognizes objects and assigns meaning to

sensory stimuli. This course will review the traditional picture of

visual processing in the brain that underlies CNNs, in particular the

concept of a feedforward, 'simple-to-complex' hierarchy, and then

present new insights from neuroscience regarding flexibility in the brain’s

processing hierarchy, including shortcuts, additional levels, feedback

signaling and interactions across different hierarchies that have

greatly impacted our understanding of how the brain can perform

ultra-fast object localization, learn new concepts by leveraging prior

learning, and learn in deep hierarchies.

Syllabus

Session 1: The basics:

Vision in the brain: Feedforward, simple-to-complex hierarchies

Session 2: New insights into deep hierarchies in the brain: Deep, deeper and

shallower processing; re-entrant signals for learning and conscious

awareness.

Session 3: Learning across modalities: From objects to

words, audition, and touch.

References

Riesenhuber, M., &

Poggio, T. (2002). Neural Mechanisms of Object Recognition. Current

Opinion in Neurobiology 12: 162-168.

Pre-requisites

Some basic

neuroscience knowledge is helpful, as is having a brain.

Short-bio

Dr. Riesenhuber is

Director of the Laboratory for Computational Cognitive Neuroscience and

Professor of Neuroscience at Georgetown University Medical Center. His

research investigates the neural mechanisms underlying object

recognition and task learning in the human brain across sensory

modalities. Current research foci are ultra-rapid object localization,

leveraging prior learning, multi-tasking, and vibrotactile object

recognition and speech. The computational model at the core of his

research has been quite successful in elucidating the neural mechanisms

underlying robust invariant object recognition, contributing to

Technology Review Magazine’s naming him one of their TR100 in 2003, “the

100 people under age 35 whose contributions to emerging technologies

will profoundly influence our world.” Dr. Riesenhuber has received

several awards, including a McDonnell-Pew Award in Cognitive

Neuroscience and an NSF CAREER Award. He holds a PhD in computational

neuroscience from MIT.

Summary

The goal of the tutorial

is to introduce recent and exciting developments in various deep

learning methods. The core focus will be placed on algorithms that can

learn multi-layer hierarchies of representations, emphasizing their

applications in information retrieval, data mining, collaborative

filtering, and computer vision.

Syllabus

The tutorial will be

split into two parts.

1: The first part will provide a gentle

introduction to graphical models, neural networks, and deep learning

models. Topics will include:

Unsupervised learning methods,

including autoencoders, restricted Boltzmann machines, and methods for

learning over-complete representations.

Supervised methods for deep

models, including deep convolutional neural network models and their

applications to text comprehension, data mining, image and video

analysis.

2: The second part of the tutorial will introduce more

advanced models, including Variational Autoencoders, Generative

Adversarial Networks, Deep Boltzmann Machines, and Recurrent Neural

Networks. We will also address mathematical issues, focusing on

efficient large-scale optimization methods for inference and

learning.

Throughout the tutorial, we will highlight applications of

deep learning methods in the areas of natural language processing,

reading comprehension, multimodal learning, collaborative filtering, and

image/video analysis.

References

https://simons.berkeley.edu/talks/tutorial-deep-learning

Pre-requisites

Basic knowledge of

probability, linear algebra, and introductory machine learning.

Short-bio

Ruslan Salakhutdinov

received his PhD in machine learning (computer science) from the

University of Toronto in 2009. After spending two post-doctoral years at

the Massachusetts Institute of Technology Artificial Intelligence Lab,

he joined the University of Toronto as an Assistant Professor in the

Department of Computer Science and Department of Statistics. In February

of 2016, he joined the Machine Learning Department at Carnegie Mellon

University as an Associate Professor.

Ruslan's primary interests lie

in deep learning, machine learning, and large-scale optimization. His

main research goal is to understand the computational and statistical

principles required for discovering structure in large amounts of data.

He is an action editor of the Journal of Machine Learning Research and

served on the senior programme committee of several learning conferences

including NIPS and ICML. He is an Alfred P. Sloan Research Fellow,

Microsoft Research Faculty Fellow, Canada Research Chair in Statistical

Machine Learning, a recipient of the Early Researcher Award, Connaught

New Researcher Award, Google Faculty Award, Nvidia's Pioneers of AI

award, and a Senior Fellow of the Canadian Institute for Advanced

Research.

Summary

With the diffusion of

cheap sensors, sensor-equipped devices (e.g., drones), and sensor

networks (such as the Internet of Things), as well as the development of

inexpensive human-machine interaction interfaces, the ability to quickly

and effectively process sequential data is becoming more and more

important. Many tasks may benefit from advances in this

field, ranging from monitoring and classification of human behavior to

prediction of future events. Many approaches have been

proposed in the past to learn in sequential domains, ranging from linear

models to early models of Recurrent Neural Networks, up to more recent

Deep Learning solutions. The lectures will start with the presentation

of relevant sequential domains, introducing scenarios involving

different types of sequences (e.g., symbolic sequences, time series,

multivariate sequences) and tasks (e.g., classification, prediction,

transduction). Linear models will be introduced first, including linear

auto-encoders for sequences. Subsequently, non-linear models and related

training algorithms will be reviewed, starting from early versions of

Recurrent Neural Networks. Computational problems and proposed solutions

will be presented, including novel linear-based pre-training approaches.

Finally, more recent Deep Learning models will be discussed. Lectures

will close with some theoretical considerations on the relationships

between Feed-forward and Recurrent Neural Networks, and a discussion

about dealing with more complex data (e.g., trees and graphs).

Syllabus

1. Introduction to

sequential domains and related computational tasks

2. Linear models

for sequences

3. Linear auto-encoders for sequences: optimal and

approximate solutions